Databricks Certified Machine Learning Professional: DATABRICKS-MACHINE-LEARNING-PROFESSIONAL

Want to pass your Databricks Certified Machine Learning Professional DATABRICKS-MACHINE-LEARNING-PROFESSIONAL exam in the very first attempt? Try Pass2lead! It is equally effective for both starters and IT professionals.

- Vendor: Databricks

- Exam Code: DATABRICKS-MACHINE-LEARNING-PROFESSIONAL

- Exam Name: Databricks Certified Machine Learning Professional

- Certifications: ML Data Scientist

- Total Questions: 60 Q&As

- Updated on: Dec 16, 2024

- Note: Product instant download. Please sign in and click My account to download your product.

- Q&As Identical to the VCE Product

- Windows, Mac, Linux, Mobile Phone

- Printable PDF without Watermark

- Instant Download Access

- Download Free PDF Demo

- Includes 365 Days of Free Updates

VCE

- Q&As Identical to the PDF Product

- Windows Only

- Simulates a Real Exam Environment

- Review Test History and Performance

- Instant Download Access

- Includes 365 Days of Free Updates

Passing Certification Exams Made Easy

Everything you need prepare and quickly pass the tough certification exams the first time

- 99.5% pass rate

- 7 Years experience

- 7000+ IT Exam Q&As

- 70000+ satisfied customers

- 365 days Free Update

- 3 days of preparation before your test

- 100% Safe shopping experience

- 24/7 Support

Databricks DATABRICKS-MACHINE-LEARNING-PROFESSIONAL Last Month Results

Free DATABRICKS-MACHINE-LEARNING-PROFESSIONAL Exam Questions in PDF Format

Related ML Data Scientist Exams

DATABRICKS-MACHINE-LEARNING-PROFESSIONAL Online Practice Questions and Answers

Questions 1

A machine learning engineer is migrating a machine learning pipeline to use Databricks Machine Learning. They have programmatically identified the best run from an MLflow Experiment and stored its URI in the model_uri variable and its Run ID in the run_id variable. They have also determined that the model was logged with the name "model". Now, the machine learning engineer wants to register that model in the MLflow Model Registry with the name "best_model". Which of the following lines of code can they use to register the model to the MLflow Model Registry?

A. mlflow.register_model(model_uri, "best_model")

B. mlflow.register_model(run_id, "best_model")

C. mlflow.register_model(f"runs:/{run_id}/best_model", "model")

D. mlflow.register_model(model_uri, "model")

E. mlflow.register_model(f"runs:/{run_id}/model")

Questions 2

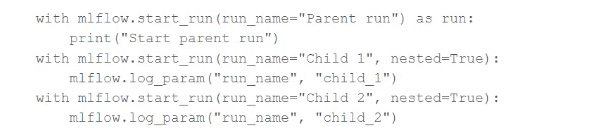

A data scientist is using MLflow to track their machine learning experiment. As a part of each MLflow run, they are performing hyperparameter tuning. The data scientist would like to have one parent run for the tuning process with a child run for each unique combination of hyperparameter values.

They are using the following code block:

The code block is not nesting the runs in MLflow as they expected.

Which of the following changes does the data scientist need to make to the above code block so that it successfully nests the child runs under the parent run in MLflow?

A. Indent the child run blocks within the parent run block

B. Add the nested=True argument to the parent run

C. Remove the nested=True argument from the child runs

D. Provide the same name to the run_name parameter for all three run blocks

E. Add the nested=True argument to the parent run and remove the nested=True arguments from the child runs

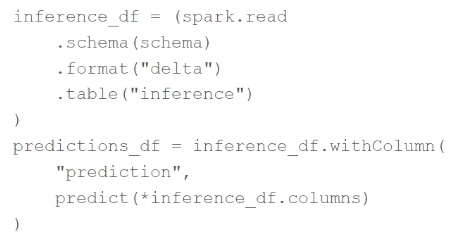

Questions 3

A machine learning engineer is using the following code block as part of a batch deployment pipeline:

Which of the following changes needs to be made so this code block will work when the inference table is a stream source?

A. Replace "inference" with the path to the location of the Delta table

B. Replace schema(schema) with option("maxFilesPerTrigger", 1)

C. Replace spark.read with spark.readStream

D. Replace format("delta") with format("stream")

E. Replace predict with a stream-friendly prediction function

Reviews

-

Yesterday, I passed the exam with unexpected score with the help of this dumps. Thanks for this dumps.Recommend strongly.

-

Wonderful study material. I used this material only half a month, and eventually I passed the exam with high score. The answers are accurate and detailed. You can trust on it.

-

Passed exam today with 989/1000. All questions were from this dumps. It's 100% valid. Special thanks to my friend Lily.

-

This dumps is enough to pass exam.There are many new questions and some modified questions.Good luck to you all.

-

Thanks god and thank you all. 100% valid. all the other questions are included in this file.

-

yes, i passed the exam in the morning, thanks for this study material. Recommend.

-

Great dumps ! Thanks a million.

-

Paas my exam today. Valid dumps. Nice job!

-

Very good dumps, take full use of it, you will pass the exam just like me.

-

Hello, guys. i have passed the exam successfully in the morning,thanks you very much.

Printable PDF

Printable PDF